Jörg Simon Wicker

Senior Lecturer | CEO | Founder

My research spans both applied and non-applied machine learning. Currently, I am particularly interested in the reliability of machine learning algorithms, adversarial machine learning, and bias, with applications in chemistry and environmental science. For more information about my current research, please see our lab webpage.

Co-founder and CEO of enviPath Limited, a university spin-out built around the enviPath system that develops AI solutions for chemistry. The company builds on more than 15 years of research in the area, and we employ a team of experts in AI, Machine Learning, Chemistry, and Software Engineering.

Senior Lecturer at the School of Computer Science of the University of Auckland. Check out my lab page for more details. We are always looking for students interested in joining our lab.

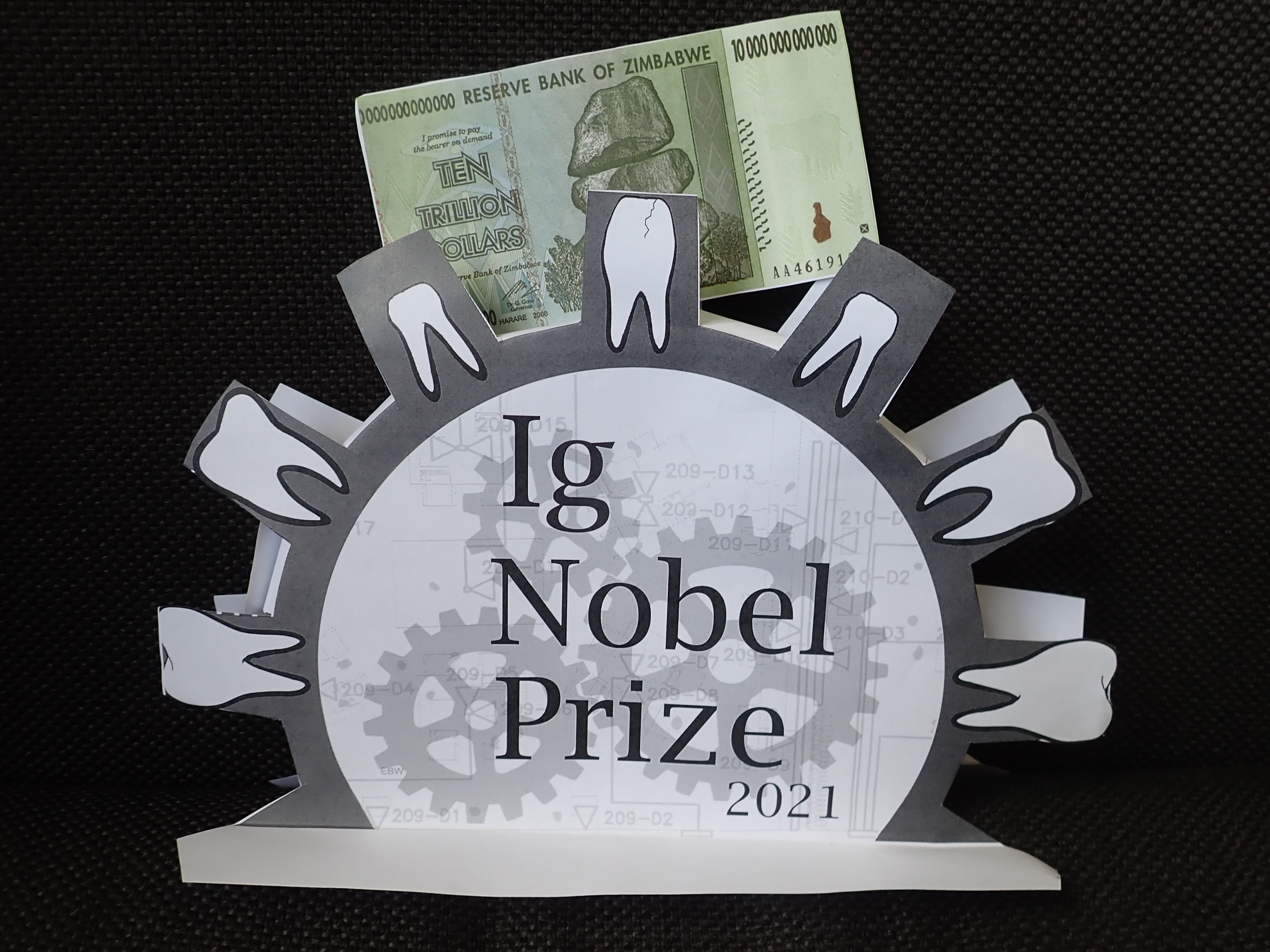

Winner of the Ig Nobel Prize in Chemistry in 2021, for chemically analyzing the air inside movie theaters, to test whether the odors produced by an audience reliably indicate the levels of violence, sex, antisocial behavior, drug use, and bad language in the movie the audience is watching [26, 49, 53, 70, 71].

Experience

- enviPath Limited

- CEO & Co-Founder

- since December 2024

- University of Auckland – School of Computer Science

- Senior Lecturer

- since February 2020

- Lecturer

- August 2017 – January 2020

- enviPath UG & Co. KG

- Co-Founder

- CTO

- September 2019 – November 2025

- CEO

- January 2017 – August 2019

- Johannes Gutenberg University Mainz – Data Mining Group

- Research Associate

- November 2011 - August 2017

- Technical University of Munich – Machine Learning and Data Mining in Bioinformatics Group

- Research Associate

- July 2007 - September 2011

Education

- Technical University of Munich – PhD – Computer Science

- July 2007 - September 2013

- Ludwig Maximilian University of Munich & Technical University of Munich – Diplom (equivalent to M.Sc.) - Bioinformatics

- September 2000 - May 2007

Research visits

- Institut Jožef Stefan – Department of Knowledge Discovery Technologies

- March 2025 - November 2025

- University of Waikato – Machine Learning Group

- March 2016 - April 2016

- University of Waikato – Machine Learning Group

- August 2015

- University of Waikato – Machine Learning Group

- March 2014 - April 2014

- Institut Jožef Stefan – Department of Knowledge Discovery Technologies

- November 2009 - December 2009

Publications

Book chapters

Journal articles

Conference papers

Preprints

Dissertation

Miscellaneous

Supervision

PhD

- Lewis Msasa

- Groundwater Health Index (GHI): A Data Driven Approach to Climate-Resilient Water Management – starting in 2026

- Alexander Bikeyev

- Molecule Generation through Adversarial Learning – since 11/2025

- Junyan Zhong

- Robust Graph-Based Recommendation Systems: Analyzing Threats and Developing Countermeasures – since 03/2024

- Mark Chen

- Adversarial Attacks on Time Series – since 03/2023

- Ioannis Ziogas

- Machine Learning Models for Rare Event Data: Applications, Limitations, and Performance – since 02/2023

- Annie Lu

- Machine Learning in Longitudinal Studies – since 05/2020

- Nooriyan Poonawala-Lohani

- Predictive Analytics for Early Warning of Influenza-like Illness – since 02/2019

- Xinglong (Luke) Chang

- Adversarial Learning – 10/2019-03/2025

- Jonathan Kim

- Towards Robust Semantic Scene Understanding through Joint Optimisation of Visual SLAM and Deep Convolutional Neural Networks – 01/2019–06/2025

- Katharina Dost

- Selection Bias – Identification and Mitigation with no Ground Truth Information – 07/2019-08/2023

Co-supervisor

- Liam Brydon-Brown

- Predicting Products of Chemical Reactions – since 2023

- Qinwen Yang

- Addressing the Challenges of Knowledge Discovery Using Machine Learning Methods – since 11/2023

- Run Luo

- A Combinatorial Chemistry and Deep Learning Method for Environmental Effect Analysis of Nanopesticides – since 12/2021

- Cathy Hua

- Query-Focused, Analysis-Friendly Text Summarisation of Survey Responses – since 02/2022

- Xuan (Johnny) Zhu

- A mathematical model guided machine learning method for understanding epidemic multiple wave mechanisms – since 01/2021

- Olivier Graffeuille

- Machine Learning for Extreme Event Detection – 08/2020-08/2025

Master of Science

- Irsyaad Rijwan

- Predicting biodegradation pathways – 2025/2026

- Asif Cheena

- Don’t Swim in Data: Real-Time Microbial Forecasting for New Zealand Recreational Waters – 2024

- Marrick Lip

- A Machine Learning Framework for the Analysis of Bat Calls – 2022

Co-supervisor

- Andrew Chester

- Detecting Bias in Machine Learning Algorithms: End to End De-identification Framework for Clinical Text – 2020

Honours

- Saurav Krishnakumar

- Evaluation of Pollutant Pathway Prediction Models – S1/S2 2025

- Vince Guan

- Designing Chemical Compounds Using Adversarial Learning – S1/S2 2025

- Sam Chen

- Adversarial Attacks on Clustering Algorithms – S2 2022/S1 2023

- Liam Brydon

- Finding Patterns in Chemical Reactions – S1/S2 2022

- Maxwell Zhu

- Machine Learning Matching Algorithms in Dating Platforms – S1/S2 2022

- Viaan Saunderson

- Adversarial Attacks on Graphs – S1/S2 2022

- Zac Pullar-Strecker

- enviPath – S1/S2 2022

- Chong Chuah

- Bias in Machine Learning – S1/S2 2022

- Mark Chen

- Adversarial Learning on Time Series – S2 2021/S1 2022

- Hamish Duncanson

- IMITATE: Identification and Mitigation of Selection Bias – S1 2021

- Milan Law

- Data Analysis of COVID-19 Data Sets – S1/S2 2020

- Kitty Li

- Mining the RDF Graph to Improve the Performance of Classifiers – S1/S2 2019

Engineering Part 4 Projects

- Emma Wang

- Streamlining adversarial machine learning on Memento – S1/S2 2024

- Lina Yuan

- Streamlining adversarial machine learning on Memento – S1/S2 2024

- Emily Zou

- Connecting Adversarial Learning and Applicability Domain in Cheminformatics – S1/S2 2024

- Lee Violet Ong

- Connecting Adversarial Learning and Applicability Domain in Cheminformatics – S1/S2 2024

- Clemen Sun

- Poison is Not Traceless: Fully-Agnostic Detection of Poisoning Attacks – S1/S2 2024

- Eugene Chua

- Poison is Not Traceless: Fully-Agnostic Detection of Poisoning Attacks – S1/S2 2024

- Hannah Zhang

- A dating platform for interpersonal relationship research – S1/S2 2023

- Lang Cheng

- A dating platform for interpersonal relationship research – S1/S2 2023

Co-supervisor

- Angela Hollings

- Ear, nose, and throat app development – S1/S2 2021

- Elizabeth Yap

- Ear, nose, and throat app development – S1/S2 2021

Master of Data Science

- Charlie Chen

- AI and Freshwater Modelling – 07/2023-11/2023

- Tsz Fung Ip

- Finding Patterns in Recordings of Bat Calls – S2 2021/S1 2022

- Xianzhong Li

- Inference of Cluster Information based on Unlabelled Datasets – S1 2021

- Xiao Li

- Prediction and Analysis of Earthquake Mainshocks in the Ring of Fire by Machine Learning Approaches – S1 2021

- Yuanchi Ma

- Inference of Cluster Information based on Unlabelled Datasets – S1 2021

- Zhe Wu

- Aftershocks Predictions Following a Major Earthquake in the Ring of Fire Region – S1 2021

- Bruno Naveen Joswa

- Identifying and Analysing Bat Calls – S2 2020

- Mary Grace De la Pena

- Machine Learning-based Prediction of Biodegradation Persistence – S2 2020

- Josh Bensemann

- Change Mining in the Smell of Fear Data Set – S2 2019

- Owen Meyer

- Analysis of the CARIBIC Data Set – S2 2019

- Charles Tremlett

- Generating Chemical Structures and Improving Models using Reinforcement Learning – S1/S2 2019

- Catherine Liu

- Dynamic Pricing – S2 2018/S1 2019

- Loukas Lyden

- Modelling User Behaviour in Online Shopping – S2 2018/S1 2019

- Masoumeh Shariat

- Analysis of Petrol related VOCs in the CARIBIC Data Set – S2 2018/S1 2019

- Samantha Cen

- Identifying Contrails in the CARIBIC Data Set – S2 2018/S1 2019

- Ziqing Yan

- A New Field of Data Mining: Classification of Movies based on VOCs – S1 2018

CS380 projects

- Yena Ahn

- Auditing Artificial Intelligence with Adversarial Learning: Meta-Learning – S1 2023

- Jonathan Leung

- Model Response to Electroconvulsive Therapy Changes based on EEG Traces – S2 2021

- Hasnain Cheena

- Machine Learning Approaches for Mass Spectrometry Data Analysis – S1 2020

- Cathy Hua

- Machine Learning Analysis of Student Feedback – S1 2020

- Chloe Haigh

- Biodegradation Half-Life Prediction – S1 2020

- Aryan Lobie

- Weather Prediction using Deep Neural Networks – S2 2019

- Sichun (Victor) Yin

- Advanced Boolean Matrix Decomposition – S1 2018

- Tom Février

- Identifying Markers for Human Emotion in Breath Using Convolutional Autoencoders on Movie Data – S1 2018

Summer Scholarships

- Kevin Zou

- Adversarial Time Series – 2025

- Maishi Huang

- Machine Learning-based Prediction of the Environmental Fate of Pollutants – 2025

- Mihnea Vlad

- Adversarial Time Series – 2024

- Saurav Krishnakumar

- Predicting Persistence of Environmental Pollutants – 2024

- Liam Brydon

- Finding Patterns in Chemical Reactions – 2022

- Ryan La

- Auditing Machine Learning Models: Quantifying Reliability using Adversarial Regions – 2022

- Sarah Kim

- Identifying and analysing bat calls – 2022

- Yuye Zhang

- Auditing Machine Learning Models: Quantifying Reliability using Adversarial Regions – 2022

- Zac Pullar-Strecker

- Adversarial Active Learning – 2021

- Chloe Haigh

- Privacy Defense – 2020

- Matthew Mulvey

- Machine Learning in the Analysis of Mass Spectrometry Data – 2020

- Cathy Hua

- Advanced Methods for Boolean Matrix Decomposition – 2019

- Hasnain Cheena

- The Smell of Fear – 2019

- Rayner Rebello

- Advanced Methods for Boolean Matrix Decomposition – 2019

Master of Information Technology

- Dao (Robin) Gu

- The Great Unmatched – 2023

- Qiong Zhou

- Fast & Accurate Chromosome Assembly – Streaming curation using image-based methods – 2023

- Dingguan Lyu

- Business Analyst – 2022

- Aditio Nugroho

- Proof of Concept – Salesforce Utility Cloud – 2021

- Hiu Wing Doris

- Proof of Concept – Salesforce Utility Cloud – 2021

- Shakeel Khan

- Proof of Concept – Salesforce Utility Cloud – 2021

- Shriya Sadhu

- Proof of Concept – Salesforce Utility Cloud – 2021

- Hongnan Dou

- Crash Prediction – 2020

- Jiangning Lin

- Crash Prediction – 2020

- Wenjie Xu

- Crash Prediction – 2020

- Xiangli (Ben) Cheng

- Crash Prediction – 2020

- Catherine Blandin De Chalain

- Managed Service Customer Portal – 2019

- Pradeep Kumar

- Brave New Coin – 2018

- Xeshu Shen

- AT Camera Testing – 2018

- Ning Hua

- Explainability in Medical Models – 2017

- Samil Farouqui

- Ad Bidding – 2017

Diplom (equivalent to M.Sc. – at Johannes Gutenberg University Mainz and Technical University of Munich, Germany)

- Christian Sußenberger

- Predicting Toxicity of Biodegradation Products Using REST – JGU – 2014

Co-supervisor

- Katharina Dost

- Boolean Matrix Decomposition for Giant Matrices – JGU – 2016

- Award for Outstanding Master's Thesis – Faculty for Physics, Mathematics, and Computer Science

- Steffen Albrecht

- Data Mining for The Cancer Genome Atlas – JGU – 2016

- Christoph Brosdau

- Service Oriented Data Mining for Biological Data – TUM – 2008

- Daniela Bieley

- Integration of String Mining in an Inductive Database – TUM – 2008

B.Sc. (equivalent to Honours – co-supervisor at Johannes Gutenberg University Mainz and Technical University of Munich, Germany)

- Steven Lang

- Predicting the Persistence of Environmental Pollutants – 2017

- Nicolas Krauter

- Understanding a Search Engine's User Categorization – 2016

- Tim Lorsbach

- Optimizing the Coherence of Binary Relevance at Prediction Time – 2016

- Konstantinos Katikakis

- An Empirical Review on Modular Boolean Matrix Decomposition – 2015

- Andrey Tyukin

- A Framework for Parallel Computation in Data Mining and its Application to Autoencoders – 2015

- Florian Seifert

- Data Mining in Medical Databases – TUM – 2012

- Sebastian Lehnerer

- Feature and Label-Selection for Multi-Label Classification – TUM – 2011

- Julian Lemke

- Data Imputation for Multi-Label Classification – TUM – 2011

Talks

Invited Talks

- Advancements in Biotransformation Pathway Prediction: Enhancements, Datasets, and Novel Functionalities in enviPath

- European Chemical Agency (2025)

- Advancements in Biotransformation Pathway Prediction: Enhancements, Datasets, and Novel Functionalities in enviPath

- Technical University of Munich (2025)

- Reliable Machine Learning – Methods and Applications in Environmental Sciences

- Institut Jožef Stefan (2025)

- Advancements in Biotransformation Pathway Prediction: Enhancements, Datasets, and Novel Functionalities in enviPath

- Boehringer Ingelheim (2025)

- Reliable Machine Learning – Methods and Applications in Environmental Sciences

- University of Stavanger (2025)

- Reliable Machine Learning – Methods and Applications in Environmental Sciences

- INESC TEC - Institute for Systems and Computer Engineering, Technology and Science, Porto (2025)

- Advancements in Biotransformation Pathway Prediction: Enhancements, Datasets, and Novel Functionalities in enviPath

- Environmental Protection Agency NZ (2024)

- Reliable Machine Learning – Methods and Applications in Environmental Sciences

- Technical University of Munich (2024)

- Reliable Machine Learning – Methods and Applications in Environmental Sciences

- RIKEN AIP (2023)

- Beyond the Smell of Fear: Data Mining for Atmospheric Chemistry

- University of Waikato (2016)

- Cinema Data Mining: The Smell of Fear

- RMIT University (2016)

- enviPath – database and prediction system for the microbial biotransformation of organic environmental contaminants

- Biochemical Pathways and Large Scale Metabolic Networks, Swiss Institute of Bioinformatics (2016)

- Cinema Data Mining: The Smell of Fear

- University of Waikato (2015)

- enviPath – Technical Aspects and New Data

- enviPath Workshop (2015)

- Large Classifier Systems in Bio- and Cheminformatics

- University of Waikato (2014)

- Machine Learning in Biodegradation Pathway Prediction

- RMIT University (2016)

- NGE-PPS: The Next-Generation Biotransformation Pathway Prediction System

- ATHENE Workshop (2014)

- Using Classifier Systems in Biodegradation Pathway Prediction and Predictive Toxicology

- Lhasa Ltd. (2014)

- SINDBAD and SiQL: an Inductive Database and Query Language in the Relational Model

- Institut Jožef Stefan (2009)

Demos

- Scavenger – A Framework for the Efficient Evaluation of Dynamic and Modular Algorithms

- ECML/PKDD (2015)

- BMaD – A Boolean Matrix Decomposition Framework

- ECML/PKDD (2014)

- An Inductive Database and Query Language in the Relational Model

- EDBT (2008)

- SINDBAD and SiQL: An Inductive Database and Query Language in the Relational Model

- ECML/PKDD (2008)

Conference Talks

- XOR-based Boolean Matrix Decomposition

- International Conference on Data Mining (2019)

- The Best Privacy Defence is a Good Privacy Offence – Obfuscating a Search Engine User’s Profile

- ECML/PKDD (2017)

- A Nonlinear Label Compression and Transformation Method for Multi-Label Classification using Autoencoder

- PAKDD (2016)

- Cinema Data Mining: The Smell of Fear

- KDD (2015)

- Multi-Label Classification Using Boolean Matrix Decomposition

- ACM SAC Data Mining Track (2012)

- Predicting Biodegradation Products and Pathways: A Hybrid Knowledge-Based and Machine Learning-Based Approach

- TransCon (2010)

- SINDBAD SAILS: A Service Architecture for Inductive Learning Schemes

- First Workshop on Third Generation Data Mining: Towards Service-Oriented Knowledge Discovery (2008)

- Machine Learning and Data Mining Approaches to Biodegradation Pathway Prediction

- International Workshop on the Induction of Process Models at ECML/PKDD (2008)

Posters

- Advancements in Biotransformation Pathway Prediction: Enhancements, Datasets, and Novel Functionalities in enviPath

- Symposium on Pesticide Chemistry (2024)

- Advanced Multi-Label Classification Methods for the Prediction of ToxCast Endpoints

- OpenTox Euro Meeting (2013)

- Extending the Prediction of the Environmental Fate of Chemicals Using REST Webservices

- OpenTox Euro Meeting (2013)

- An Extensive Multi-Label Analysis of the ToxCast Data Set

- OpenTox Euro Meeting (2011)

- Multi-Relational Learning by Boolean Matrix Factorization and Multi-Label Classification

- International Conference on Inductive Logic Programming (2011)

- An Extensive Multi-Label Analysis of the ToxCast Data Set

- European Symposium on Quantitative Structure-Activity Relationship (2010)

Teaching

- CS101

- Introduction to Programming

- SS 2019

- CS351

- Fundamentals of Database Systems

- S1 2018, S1 2019 (coordinator)

- CS361

- Introduction to Machine Learning

- S1 2019, S1 2020, S1 2021, S1 2023, S1 2024, S1 2025 (coordinator)

- CS361 (remotely at NEFU)

- Introduction to Machine Learning

- S1 2022

- CS751

- Advanced Topics in Database Systems

- S1 2018, S1 2019 (coordinator)

- CS760

- Data Mining and Machine Learning

- S1 2018, S2 2018 (coordinator), S1 2019, S2 2019 (coordinator), S1 2020 (coordinator), S2 2020, S2 2023

- CS762

- Advanced Machine Learning

- S1 2020, S1 2021 (coordinator), S1 2022, S1 2023, S1 2024, S1 2025 (coordinator)

- Software Engineering

- Johannes Gutenberg University Mainz

- 2016

- Data Mining

- Johannes Gutenberg University Mainz

- 2016

Service

Program Committee

- since 2017

- AAAI - Conference on Artificial Intelligence

- since 2019

- ECAI - European Conference on Artificial Intelligence

- since 2013

- ECML / PKDD - European Conference on Machine Learning and Principles and Practice of Knowledge Discovery in Databases

- since 2020

- ICDM - International Conference on Data Mining

- since 2017

- IJCAI - International Joint Conference on Artificial Intelligence

- since 2021

- KDD - SIGKDD Conference on Knowledge Discovery and Data Mining

- since 2018

- PAKDD - Pacific-Asia Conference on Knowledge Discovery and Data Mining

Reviewing

- Atmospheric Chemistry and Physics (Copernicus Publications)

- Computers in Biology and Medicine (Elsevier)

- Conference on Computer Vision and Pattern Recognition (CVPR)

- Environmental Science: Processes & Impacts (Royal Society of Chemistry)

- Nature Communications (Nature)

- Pattern Recognition (Elsevier)

- Pattern Recognition Letters (Elsevier)

Organising Committee

Local Organizer

- MLSB – International Workshop on Machine Learning in Systems Biology – 2011

- OpenTox – Innovation in Predictive Toxicology: OpenTox InterAction Meeting – 2011

- ICDM – International Conference on Data Mining – 2021